Why Now? Why AI?

Learning and development is in the middle of a fundamental shift. Not long ago, L&D teams were seen as order takers—build the course, deliver the request, move on. That model no longer works. Today’s L&D leaders are expected to act like performance consultants by validating needs and ensuring learning is truly the right solution.

At the same time, organizations are moving to skills-based hiring and development. It’s not about general training anymore; it’s about building specific skills that drive internal mobility and measurable outcomes.

Content itself is also changing. AI will soon personalize learning based on each employee’s history, performance, and goals, which is far beyond what traditional instructional design can deliver. L&D’s role is shifting from creating every asset to architecting experiences, training AI systems, and reviewing outputs for accuracy and impact.

AI isn’t a distant trend. It’s here, and it’s redefining how learning teams work. A year from now, the expectations on L&D will look very different, and the time to start preparing for that reality is now.

The Promise of AI for Learning

AI doesn’t replace your L&D team—it amplifies it.

It’s more than a productivity hack—it’s a strategic advantage. According to Brandon Hall Group, 89% of organizations expect AI to transform learning and development in 2025. Fosway reports that 64% of companies are adopting AI to boost learning efficiency and 63% to improve effectiveness. That’s not hype—that’s momentum.

Here’s what AI makes possible:

- Content in minutes—drafted at scale

Generate structured course drafts from product documentation in a fraction of the time.

- Adaptive learning paths calibrated to each learner

Use behavioral signals, such as responses, assessments, and engagement, to deliver personalized journeys.

- Coaching simulations that reflect real-world scenarios

Create dynamic role-plays for sales, customer support, or leadership, specific to roles and regions.

- Smart automation of engagement and administration

Trigger reminders, follow-ups, and reporting based on learner behaviors, while freeing up your team’s bandwidth.

- Predictive insights tied to business outcomes

Use AI analytics to identify at-risk learners, measure the ROI of programs, and prove the impact on performance and retention.

This is where L&D is headed. The advantage is clear when AI is understood and implemented strategically.

Understanding the Language of Learning AI

Let’s be honest: most of us in learning and development didn’t get into this field to write algorithms or debug code. We’re creators, facilitators, and connectors. That’s why AI can feel intimidating—like the future of our field is getting very technical, very fast.

The good news: You don’t need to be a data scientist to understand how AI can help you. You just need a shared vocabulary and a sense of how these tools support the work you already love doing. Here’s a primer.

Artificial Intelligence (AI)

Think of AI as a smart helper. It’s technology that can perform tasks we’d usually expect a human to do, like recognizing speech, spotting patterns, or making recommendations.

In L&D: AI can suggest the right course to the right learner at the right time, or flag when someone is falling behind so you can step in.

Artificial Neural Network

A neural network is the architecture inside many machine learning systems, including large language models, that mimics the structure of the human brain, at least loosely. It’s made up of layers of artificial “neurons” that pass signals between each other, gradually learning how to make predictions, recognize patterns, or generate responses.

In L&D: Neural networks help power adaptive learning paths by analyzing learner behavior over time—such as quiz performance or content engagement—and adjusting what’s shown next to support mastery and retention.

Machine Learning (ML)

Machine learning is the subfield of AI where computers learn by example, not by explicit instruction. Instead of being hand-coded with every rule and outcome, these systems are fed mountains of data and taught to recognize patterns on their own. Think: spam filters that learn what junk mail looks like based on millions of examples, or training a model to distinguish cats from dogs, without ever writing “if it has whiskers, it’s a cat.”

In L&D: ML can notice that learners with certain roles tend to struggle with a particular topic. Over time, it learns to recommend extra resources before those learners get stuck.
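To make “learning by example” concrete, here is a minimal sketch in Python. It assumes a hypothetical history of (role, topic, struggled) records. The names `train` and `should_recommend_extra_help` and the 0.5 threshold are illustrative, not taken from any product. Nobody writes a rule like “sales reps struggle with pricing”; the rates are simply counted from the data. Real ML systems use far richer models, but the principle is the same.

```python
from collections import defaultdict

# Hypothetical historical records: (role, topic, struggled)
HISTORY = [
    ("sales", "pricing", True),
    ("sales", "pricing", True),
    ("sales", "pricing", False),
    ("sales", "security", False),
    ("support", "pricing", False),
    ("support", "pricing", False),
    ("support", "security", True),
]

def train(records):
    """Learn the observed struggle rate for each (role, topic) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [struggled, total]
    for role, topic, struggled in records:
        counts[(role, topic)][0] += int(struggled)
        counts[(role, topic)][1] += 1
    return {key: s / n for key, (s, n) in counts.items()}

def should_recommend_extra_help(model, role, topic, threshold=0.5):
    """Recommend extra resources when the learned rate exceeds the threshold.

    Unseen (role, topic) pairs default to 0.0, meaning no recommendation.
    """
    return model.get((role, topic), 0.0) > threshold

model = train(HISTORY)
```

With this sample data, the sketch would suggest extra pricing resources for sales learners but not for support learners, because that is what the examples show.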

Natural Language Processing (NLP)

NLP is how AI understands and works with human language, written or spoken.

In L&D: NLP powers tools that can analyze learner feedback to detect sentiment or themes, summarize long documents or course content, support chat-based tutoring, or translate instructions and lessons in real time.

Foundational Models

Large language models (defined below) are just one slice of the pie. Foundational models, more commonly called foundation models, are a broader class of AI systems trained on enormous datasets (text, code, images, audio) that can be adapted for many different tasks. What makes them “foundational” is that they aren’t built for just one thing. Instead, they provide a base (a foundation) that developers can tweak and repurpose, like fine-tuning a generic model to specialize in medical diagnostics, or training it to navigate compliance-heavy enterprise learning environments. These models are big, flexible, and powerful.

In L&D: Foundational models can power AI copilots that assist instructors in building courses faster, personalize content recommendations for learners, or automate tagging and organizing learning materials across formats—text, video, slides—without needing separate tools for each content type.

Large Language Models (LLMs)

Large Language Models, or LLMs, are a type of AI system trained to understand and generate human language. Built on neural networks—software structures loosely modeled after the human brain—LLMs learn by ingesting vast amounts of text: books, articles, code, chat logs, corporate wikis, and everything in between.

They don’t memorize facts like a database. Instead, they use machine learning to detect complex patterns in how language works—how words flow together, how context shapes meaning, how tone shifts with audience. Through this process, they develop the uncanny ability to write essays, answer questions, translate languages, write code, and even tutor learners in specific domains.

In L&D: LLMs are becoming the foundation for adaptive content creation, AI-powered coaching, and highly personalized learning journeys.

Generative AI

Generative AI is the buzzword that launched a thousand startups, and it’s more than just hype. It refers to any AI system that can create new content: text, images, music, video, even code. That includes everything from ChatGPT to DALL·E to Sora.

At its best, generative AI turns your subject matter experts into editors, not authors, freeing them up to focus on strategy, nuance, and impact.

In L&D: Instead of starting with a blank page, you can ask generative AI to draft a course outline, create quiz questions, or even build images for your slides.

AI Assistant

An AI assistant is a conversational interface built on top of a powerful language model (think: GPT-4, Claude, or Gemini) that interacts with users in natural language to help them get things done. Unlike old-school chatbots that followed scripted flows, these assistants can reason, ask clarifying questions, and adapt their responses based on real-time context.

In L&D: In the enterprise learning world, AI assistants are becoming the connective tissue between learners and content. They can explain a policy like a human trainer, quiz you like a coach, or draft an onboarding plan in seconds.

AI Agent

An AI agent is more than a helpful assistant; it’s a goal-driven system that can plan, take actions, and adapt with minimal human input. While assistants respond to prompts, agents work toward outcomes, like completing tasks or coordinating with other systems.

In L&D: AI agents can take a learning goal and run with it—pulling source material, drafting modules, scheduling reviews, and flagging gaps—automating the heavy lifting behind course creation and delivery.

Frontier Models

Frontier models are the most advanced AI systems—like GPT-5 or Claude 3 Opus—designed for complex tasks such as reasoning across documents or interpreting images. They’re fast, powerful, and capable of near-human performance in some areas.

In L&D: Frontier models can power immersive, multimodal learning experiences, but their sophistication also calls for clear guidelines around privacy, bias, and responsible use.

AI in Enterprise Learning: Assistants vs. Agents vs. Automation

The Human–AI Balance: Levels of Learning Design Automation

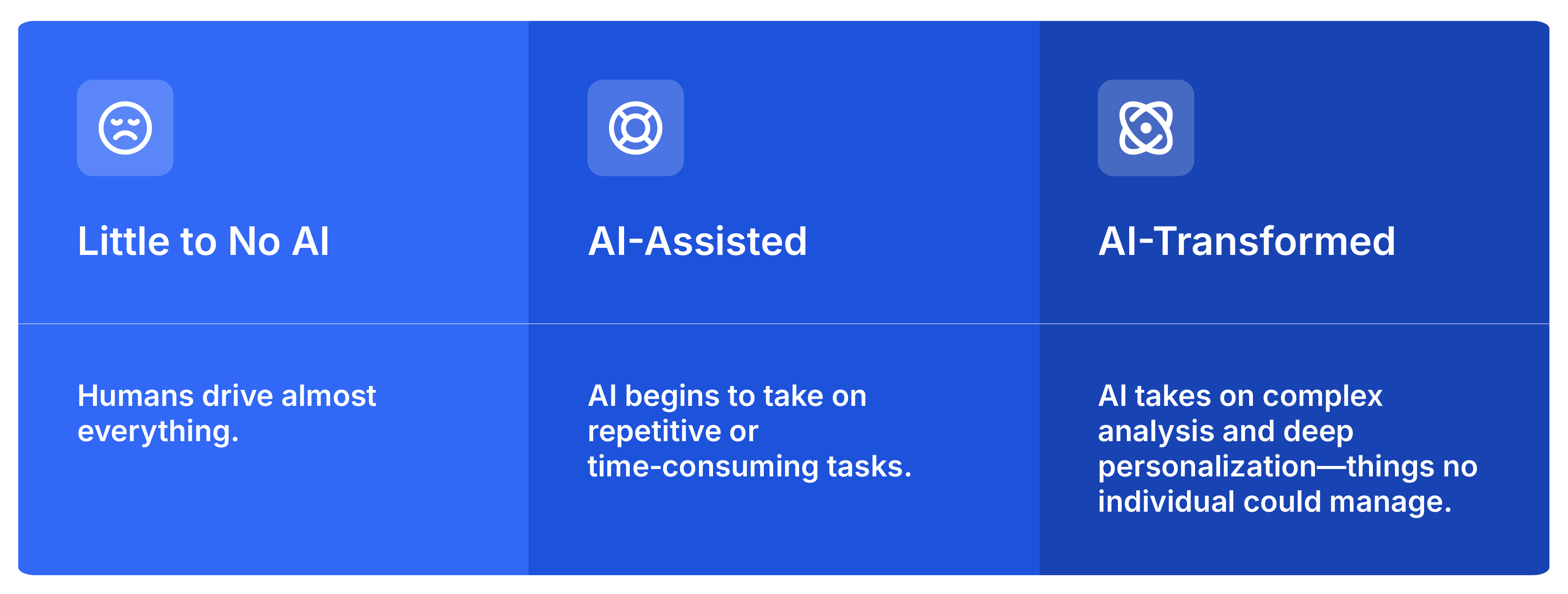

AI in learning isn’t an all‑or‑nothing switch. It’s a progression—a journey from today’s mostly human‑led workflows toward a future where AI handles more of the tasks that either

- Don’t need a human touch (like tagging hundreds of pieces of content), or

- Aren’t humanly possible (like analyzing thousands of learner interactions at once).

We’re not there yet. AI isn’t ready to replace entire teams, and transformation on this scale takes time because technology evolves, organizations adapt, and people learn new skills. But we can’t wait until the future arrives to start planning. The decisions you make today will shape how ready your team is for what’s coming.

On the left side of the scale:

Humans drive almost everything. You design content, build activities, assign learners, and track results. AI might offer light assistance—like suggesting a title or filling in metadata—but you’re still in control.

In the middle of the scale:

AI begins to take on repetitive or time‑consuming tasks. It might generate quiz questions, recommend learning paths, or send personalized nudges. You’re still setting strategy and reviewing outputs, but you’re no longer doing all the manual work.

On the right side of the scale:

AI takes on complex analysis and deep personalization—things no individual could manage. It can combine performance data, prior completions, and business goals to deliver unique learning experiences for each person. At this level, your role shifts. Instructional designers become learning experience architects: professionals who guide and orchestrate AI systems to ensure they align with strategy, standards, and ethics rather than simply building every asset by hand.

And this is where leaders need to look ahead. While AI will amplify many roles, it will also redefine and, in some cases, replace specific tasks entirely. That’s not a threat; it’s an opportunity to reshape your team around higher‑value, strategic work.

The transformation won’t happen overnight. But the organizations that are preparing now—by rethinking roles, piloting new processes, and building AI literacy—will be the ones leading, not scrambling, when AI reaches its next milestone.

Why This Matters and Why You're Still Essential

AI isn’t here to take away what you love about your work. It’s here to take away the toil—the hours spent reformatting content, chasing reports, or rewriting similar modules over and over. It gives you back time to focus on what you do best: designing meaningful learning experiences that empower people to develop the skills they need to grow.

AI also opens doors to things we simply couldn’t do before. A person can only track so many learners at once. AI can track thousands. A person can only customize content so far. AI can tailor every module to every learner.

The future of L&D isn’t less human, it’s more strategic. Your creativity, judgment, and ability to guide the learning journey are more important than ever. AI is simply the engine that will help you deliver at a scale—and with a level of personalization—we’ve never seen before.

Moving from Microefficiencies to Transformation: A Structured Approach to Applying AI in Learning

When most L&D teams first experiment with AI, they start small. They use AI for microefficiencies, like generating quiz questions, summarizing learner feedback, or auto‑tagging content. These wins are valuable. They save minutes, reduce friction, and give you a taste of what’s possible.

But AI’s real potential emerges when you step back and rethink your workflows entirely. Instead of just doing the same work faster, you can rearchitect how you work—build adaptive learning paths, automate processes at scale, and deliver experiences no human could produce alone. That’s where you save weeks, drive scale, and directly impact business outcomes.

So how do you move from microefficiencies to meaningful transformation? We recommend this six‑phase framework, designed to help you adopt AI thoughtfully, at a pace your team can support.

Phase 1: Identify & Analyze

Start with awareness and a clear-eyed look at your workflows.

- Where do bottlenecks slow you down?

- Where are learners dropping off?

- Which repetitive tasks eat hours of your team’s time?

Map those friction points. That’s where AI can start making a difference.

At this stage, also assess your readiness: Do you have the tools, skills, and governance in place to experiment safely?

Phase 2: Define Objectives

Don’t deploy AI just because it’s available.

Define measurable goals:

- What problem are you solving?

- What learner behaviors need to change?

- Which business metrics do you need to influence?

Whether your goal is faster onboarding, improved product knowledge, or higher partner certifications, clarity here prevents wasted effort and ensures AI delivers impact rather than novelty.

Phase 3: Design & Architect

Once you know your goals, design with intention.

AI can power roleplay simulations, recommend content, or generate dynamic assessments, but solid instructional design still applies: clear outcomes, learner choice, and timely feedback.

This is also where you plan transparency. How will you disclose AI’s role to learners? How will you ensure trust and safety?

Phase 4: Develop & Curate

Here’s where AI rolls up its sleeves:

- Draft modules, visuals, and video scripts

- Build simulations and FAQs

- Generate alternate learning paths

What once took weeks might take hours, but speed doesn’t replace quality.

You’ll still need to review everything for accuracy, tone, and bias. Track what AI created, what you edited, and what you approved. A strong process builds confidence in every deliverable.
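One way to track what AI created, what you edited, and what you approved is a simple provenance record. The sketch below is an assumption about how such a record might look. `ContentRecord` and its field names are hypothetical and do not describe any particular platform’s data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class ContentRecord:
    """Provenance for one deliverable (all field names are hypothetical)."""
    title: str
    ai_draft: str                       # what the AI created
    final_text: Optional[str] = None    # what a person edited it into
    edited_by: Optional[str] = None
    approved_by: Optional[str] = None
    log: list = field(default_factory=list)

    def _note(self, event: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.log.append(f"{stamp} {event}")

    def edit(self, editor: str, new_text: str) -> None:
        self.final_text = new_text
        self.edited_by = editor
        self.approved_by = None  # any edit invalidates an earlier approval
        self._note(f"edited by {editor}")

    def approve(self, reviewer: str) -> None:
        if self.final_text is None:
            self.final_text = self.ai_draft  # approved without changes
        self.approved_by = reviewer
        self._note(f"approved by {reviewer}")

    @property
    def publishable(self) -> bool:
        return self.approved_by is not None
```

The design choice worth noting is that an edit clears any earlier approval, so nothing reaches learners without a reviewer having signed off on the latest version.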

Phase 5: Implement & Deliver

Pilot before you scale.

Choose a single course, certification, or simulation. Let learners know what’s AI‑powered and why, then gather feedback and observe how they engage:

- Where do they feel supported?

- Where do they feel confused?

- How do they react to AI‑generated elements?

At the same time, test your operational readiness—integrations, permissions, and support channels.

Phase 6: Evaluate & Iterate

Measure what matters:

- Did AI shorten development cycles?

- Did engagement or completion rates improve?

- Did it reduce support tickets or increase certifications?

Tie your findings to business metrics, then refine your prompts, adjust your workflows, and expand what works.
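The questions above come down to before-and-after comparisons. Here is a minimal sketch of that arithmetic. The metric names and the pilot numbers are invented for illustration, and a simple before/after change does not by itself prove that AI caused the difference.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0

# Hypothetical pilot numbers, for illustration only.
baseline = {"dev_days": 20.0, "completion_rate": 0.60, "support_tickets": 50.0}
pilot = {"dev_days": 12.0, "completion_rate": 0.66, "support_tickets": 45.0}

# Percent change per metric, rounded to one decimal place.
report = {name: round(percent_change(baseline[name], pilot[name]), 1)
          for name in baseline}
```

For these sample numbers, the report shows development time down 40%, completion rate up 10%, and support tickets down 10%.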

This isn’t a one‑time rollout. It’s a cycle of learning, testing, and improving, in which microefficiencies become stepping stones toward a rearchitected, AI‑powered learning strategy.

The Risks Are Real and Manageable

AI can feel like magic. You type a prompt, and out comes a polished draft, a set of quiz questions, or an entire learning path. But magic without understanding is dangerous. If we don’t look behind the curtain, we risk putting blind faith in systems we don’t fully control.

For learning leaders, that’s not an option. You’re responsible for experiences that affect careers, compliance, and—ultimately—business outcomes. To adopt AI responsibly, you need to understand the real risks and know how to mitigate them.

The Black Box Problem

Most modern AI systems, especially deep learning systems, operate in ways that even their creators can’t fully explain. An AI model might recommend a course or write an answer, but why it made that choice isn’t always clear.

Why it matters for L&D:

If you don’t know how an AI reached its conclusion, you can’t be sure it aligns with your standards or your learners’ needs. A model could recommend content that’s off‑strategy or misaligned with compliance requirements, but you wouldn’t know until it’s too late.

How to manage it:

Choose AI tools that are inspectable, explainable, and overridable.

- Inspectable: You can see what data or logic the AI used.

- Explainable: The system can tell you, in plain language, why it suggested or generated something.

- Overridable: You can change, reject, or correct outputs easily.

Before buying or implementing any AI solution, ask vendors how they handle transparency and what controls you’ll have in place.
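To picture what those three properties mean in practice, here is a sketch of a recommendation record that carries all of them. It is an illustration under assumptions: `Recommendation` and its fields are hypothetical and do not reflect any vendor’s actual API.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Recommendation:
    learner_id: str
    course: str
    inputs_used: dict          # inspectable: the data behind the suggestion
    reason: str                # explainable: plain-language rationale
    override_course: Optional[str] = None
    override_by: Optional[str] = None

    def override(self, reviewer: str, course: str) -> None:
        """Overridable: a person replaces the suggestion; the original is kept."""
        self.override_course = course
        self.override_by = reviewer

    @property
    def effective_course(self) -> str:
        """The course the learner actually receives."""
        return self.override_course or self.course
```

When you talk to vendors, you can ask whether each AI suggestion comes with the equivalent of these fields, and who is allowed to change them.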

Hallucinations and Inaccuracies

AI can create content that looks polished and confident, but is completely wrong. These “hallucinations” happen because AI predicts patterns, not truth. According to recent industry studies, over 80% of users have seen AI output that contained factual errors, yet the majority said they still trusted it.

Why it matters for L&D:

If you publish AI‑generated material without review, you risk giving learners inaccurate or misleading information. In high‑stakes programs—like compliance or safety—that’s unacceptable.

How to manage it:

- Treat AI outputs as drafts, not final products. Always fact‑check and edit.

- Develop a human review process, especially for high‑stakes learning.

- Consider using Retrieval Augmented Generation (RAG) tools, which ground AI outputs in your organization’s own trusted knowledge base.

- Invest in knowledge management: the AI is only as accurate as the data you feed it.
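The idea behind Retrieval Augmented Generation can be sketched in a few lines: find the most relevant trusted document first, then ask the model to answer only from it. The example below is a toy under stated assumptions. The knowledge base is invented, the word-overlap scoring stands in for the embedding search a real RAG tool would typically use, and no actual model is called; the code stops at building the prompt.

```python
import re

# Hypothetical knowledge base: document title -> trusted text.
KNOWLEDGE_BASE = {
    "Expense policy": "Employees must submit expense reports within 30 days of purchase.",
    "Travel policy": "Book flights through the approved travel portal.",
    "Security basics": "Report phishing emails to the security team immediately.",
}

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, kb, top_k=1):
    """Rank documents by word overlap with the question (a simple stand-in
    for the similarity search a production system would use)."""
    q = tokenize(question)
    scored = sorted(
        kb.items(),
        key=lambda item: len(q & tokenize(item[0] + " " + item[1])),
        reverse=True,
    )
    return scored[:top_k]

def build_prompt(question, kb):
    """Assemble a prompt that asks the model to answer only from the sources."""
    sources = "\n".join(f"[{title}] {text}" for title, text in retrieve(question, kb))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )

prompt = build_prompt("When must I submit an expense report?", KNOWLEDGE_BASE)
```

This is also why knowledge management matters: if the expense policy in the knowledge base is out of date, the grounded answer will be out of date too.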

Bias in AI

AI learns from data, and data reflects human history, complete with all our biases. If the data used to train a model skews toward certain perspectives, so will the AI’s outputs.

Why it matters for L&D:

Imagine an AI that consistently suggests leadership content featuring men, or that tailors examples in ways that exclude certain cultural contexts. Bias undermines equity and can damage learner trust.

How to manage it:

- Ask vendors how they address bias in training data and algorithms.

- Build diverse, representative datasets for any internal AI tools.

- Review outputs for patterns that might reinforce stereotypes or overlook key learner groups.

- Keep humans involved in evaluating content for fairness and inclusion.
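Reviewing outputs for patterns can start with a simple count. The sketch below compares how often a system recommends a piece of content to different learner groups and flags large gaps for a person to look at. The group labels, the audit log, and the 0.8 ratio are all assumptions chosen for illustration; a flag is a prompt for human review, not proof of bias.

```python
from collections import Counter

def recommendation_rates(log):
    """log: iterable of (group, was_recommended) pairs -> rate per group."""
    shown, total = Counter(), Counter()
    for group, was_recommended in log:
        total[group] += 1
        shown[group] += int(was_recommended)
    return {g: shown[g] / total[g] for g in total}

def flag_disparity(rates, min_ratio=0.8):
    """Flag for human review when the lowest rate falls below
    min_ratio times the highest rate. The 0.8 default is an assumption."""
    highest = max(rates.values())
    if highest == 0:
        return False
    return min(rates.values()) / highest < min_ratio

# Hypothetical audit log: did the system recommend leadership content?
LOG = ([("group_a", True)] * 8 + [("group_a", False)] * 2
       + [("group_b", True)] * 5 + [("group_b", False)] * 5)

rates = recommendation_rates(LOG)
needs_review = flag_disparity(rates)
```

In this sample log, one group sees leadership content 80% of the time and the other 50%, so the check would route the pattern to a reviewer.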

The Limits of AI Autonomy

AI is powerful, but it’s not infallible. There will always be tasks that require human judgment, creativity, and ethical consideration.

Why it matters for L&D:

AI can generate and recommend, but it doesn’t understand your learners’ context, your culture, or your business priorities the way you do. Without oversight, it could optimize for speed instead of quality or compliance.

How to manage it:

Adopt a human‑in‑the‑loop (HITL) approach. Let AI handle repetitive tasks and data analysis, but keep people responsible for decisions that affect outcomes, equity, and culture. AI should be a collaborator, not a human replacement.
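A human-in-the-loop policy can be as simple as a triage rule. In the sketch below, only tasks on a short allowlist of repetitive work run without review, and anything high-stakes or unrecognized goes to a person. The task names and the `AUTOMATABLE` list are hypothetical, so treat this as a starting point rather than a finished policy.

```python
from dataclasses import dataclass

# Hypothetical allowlist of repetitive tasks that may run without review.
AUTOMATABLE = {"tag_content", "send_reminder", "summarize_feedback"}

@dataclass
class Task:
    kind: str
    high_stakes: bool = False

def needs_human(task: Task) -> bool:
    """High-stakes or unrecognized tasks always go to a person."""
    return task.high_stakes or task.kind not in AUTOMATABLE

def triage(tasks):
    """Split tasks into (automated, human_review) lists, preserving order."""
    automated = [t for t in tasks if not needs_human(t)]
    review = [t for t in tasks if needs_human(t)]
    return automated, review
```

Defaulting unknown tasks to human review is the deliberate choice here: the system has to earn automation one task type at a time.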

Practical Steps to Stay in Control

- Ask the right questions: Is the AI explainable? How is it tested? Can you override it?

- Pilot before scaling: Start with a low‑stakes program. Test, review, and learn.

- Track and audit outputs: Document what AI generated and what was edited.

- Invest in your knowledge base: Ensure the AI has access to accurate, up‑to‑date information.

- Train your team: Prompt engineering, review processes, and governance are new skills L&D will need.

Questions to Ask Before You Buy AI

When you’re ready to evaluate AI‑powered learning platforms, don’t stop at, “Do you have AI?” Ask questions that uncover how the product works, how it will fit into your ecosystem, and how it will help you deliver results, not headaches.

Start with your own strategy

- What problem am I trying to solve? Is this AI helping me address a specific pain point, or is it just a shiny demo?

- How will this affect learners and admins? Will it enhance existing behaviors or require new skills, new processes, or heavy retraining?

- What will it take to manage this AI long-term? AI is only as good as its inputs. How will we keep our content and knowledge base accurate and current?

Then dig into the vendor’s approach

- Is the AI functionality built natively or stitched together from third‑party tools?

- Who designs, trains, and maintains the AI? Is there an in‑house team dedicated to improvement and oversight?

- How do you handle bias, hallucinations, and inaccuracies? Can you show me the safeguards you’ve put in place?

- Can I inspect, explain, and override AI decisions? What visibility will I have into how outputs are generated?

- Is learning central to the AI model? Does it follow proven pedagogical principles, or is it just pattern recognition?

- Where is my data stored and processed? Who controls it, and how is it protected?

- Can I see the AI in action from start to finish? Show me where AI begins, where human oversight comes in, and how the system handles errors.

The Intellum Approach to AI

At Intellum, AI isn’t new, and it isn’t an add‑on.

We’ve been building intelligent capabilities into our platform for years, from early recommendation engines and personalization features to advanced analytics that help our clients understand and improve learner outcomes. What we’re delivering now is the next evolution of that work: a platform rebuilt from the ground up with AI at its core.

Because enterprise learning doesn’t just need an upgrade, it needs a transformation.

Our AI‑first LMS takes on the work that slows you down, so you can focus on the outcomes that matter. From generating course drafts and automating workflows to surfacing real‑time insights, Intellum AI gives creators, managers, and learners the power to do more with less effort.

And we bring more than technology to the table. We bring experience. For over two decades, we’ve partnered with some of the world’s most recognizable brands to deliver high‑stakes learning programs at scale. That deep understanding of instructional design, program management, and analytics shapes how we apply AI: responsibly, strategically, and with measurable impact.

The result is a system that:

- Frees your team from repetitive tasks and accelerates content creation

- Personalizes learning paths using context no human team could process alone

- Keeps you in control with outputs that are inspectable, explainable, and overridable

We guide you through the cultural and operational shifts that make AI stick. With enterprise‑grade security, data ownership, and 24/7 support, Intellum gives you the confidence to embrace what’s next.

Experience the future of enterprise learning today. Let’s talk about how Intellum AI can transform your programs.